In a previous post I briefly discussed the difference in RTKLIB between the “Continuous” mode and “Fix-and-Hold” mode for handling integer ambiguity resolution. In this post, I’d like to dig a little deeper into how fix-and-hold works and discuss some of its advantages and disadvantages, then introduce a variation I call “Fix-and-Bump”.

Each satellite has a state in the Kalman filter to estimate its phase-bias. The phase-bias is the number of carrier-phase cycles that needs to be added to the carrier-phase observation to get the correct range. By definition, the actual phase-biases must all be integers, but their estimates will be real numbers due to errors and approximations. Each epoch, the double-difference range residuals are used to update the phase-bias estimates. After these have been updated, the integer ambiguity resolution algorithm evaluates how close the phase-bias estimates (more accurately, the differences between the phase-bias estimates) are to an integer solution. In general, the closer the estimates are to an integer solution, the more confidence we have that the solution is valid. This is because all the calculations are done with real numbers and there is no inherent preference in the calculations towards integer results.
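To make "how close to an integer solution" concrete, here is a minimal sketch that measures the distance of each estimate from its nearest integer. The function name and the sample values are made up for illustration. RTKLIB itself searches for the integer set with the LAMBDA method, which also uses the covariance of the estimates, so this per-element rounding is only meant to show the idea.

```python
def frac_distance(bias_diffs):
    """Distance of each float estimate from its nearest integer, in cycles (0 to 0.5)."""
    return [abs(x - round(x)) for x in bias_diffs]

# Hypothetical double-difference phase-bias estimates, in cycles
near_integer = [3.02, -7.97, 12.01]
far_from_integer = [3.41, -7.62, 12.27]

# Worst-case distance from an integer for each set
print(max(frac_distance(near_integer)))
print(max(frac_distance(far_from_integer)))
```

The first set would look like a good candidate for a fix and the second would not, even though nothing here says anything about which set is closer to the true biases.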

It is important to understand that the validation process (integer ambiguity resolution) used to determine if the result is high quality (fixed) or low quality (float) is based entirely on how far it deviates from a set of perfect integers and not at all on any inherent accuracy. No matter how wrong a result may be, if it is all integers, the integer ambiguity resolution will deem it a high quality solution. We rely on the fact that it is very unlikely that an erroneous solution will align precisely to any set of integers.

Therefore, for the fixed solution vs float solution distinction to be reliable, it is key that we don’t violate the assumption that there is no inherent preference in the calculations toward integer results. We can use our extra knowledge that the answer should be a set of integers either to improve our answer or to verify our answer, but it is not really valid to do both. Unfortunately, that is exactly what fix-and-hold does. It uses the difference between the fixed and float solutions to push the phase-bias estimates toward integer values, then uses integer ambiguity resolution to determine how good the answer is based on how close to integers it is. This will usually improve the phase-bias estimates since it is very likely the chosen integers are the correct actual biases, but it will always improve the confidence in the result as determined by the integer ambiguity resolution, even when the result is wrong. Thus we have effectively short-circuited the validation process by adding a preference in the calculations for integer results.
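The feedback can be pictured as a Kalman update in which the chosen integer is treated as a tightly weighted pseudo-measurement of the float estimate. Below is a simplified scalar sketch of that idea; the function name, the variance `r`, and the sample numbers are assumptions for illustration and are not taken from the RTKLIB code, which works on differences between several states at once.

```python
def hold_update(x, p, fixed, r=0.001):
    """One scalar Kalman update treating the fixed integer as a pseudo-measurement.

    x, p  : float estimate (cycles) and its variance
    fixed : integer chosen by ambiguity resolution
    r     : assumed variance of the pseudo-measurement (illustrative value)
    """
    k = p / (p + r)              # Kalman gain
    x_new = x + k * (fixed - x)  # pull the estimate toward the integer
    p_new = (1.0 - k) * p        # variance shrinks, so the filter trusts it more
    return x_new, p_new

x, p = 4.30, 0.01   # hypothetical float estimate, 0.30 cycles from the integer
x, p = hold_update(x, p, fixed=4)
print(abs(x - round(x)))   # distance from the integer after the update
```

Nothing in the update knows whether 4 is the true bias. The estimate ends up much closer to an integer either way, which is exactly why the later closeness check can no longer serve as an independent test.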

This does not mean fix-and-hold is a bad thing; in fact, most of the time it will improve the solution. We just need to be aware that we have short-circuited the validation process and have compromised our ability to independently verify the quality of the solution. It also means that it is easy to fool ourselves into thinking that fix-and-hold is helping more than it really is. For example, two of the metrics I have been using to evaluate my solutions, percent fixed solutions and median AR ratio, are relatively meaningless once fix-and-hold is turned on since they may improve regardless of the actual quality of the solution. The value of a particular solution is a combination of its accuracy and our confidence in that accuracy. Fix-and-hold gives up confidence in the solution in exchange for improvement in its accuracy.
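For reference, the two metrics are simple to compute from per-epoch results. This is a small sketch with a hypothetical input layout (a fixed/float flag and an AR ratio per epoch) and made-up sample values; it is not how RTKLIB stores its solution status.

```python
from statistics import median

def solution_metrics(epochs):
    """epochs: list of (is_fixed, ar_ratio) tuples, one per epoch (hypothetical layout)."""
    fixed = [is_fixed for is_fixed, _ in epochs]
    ratios = [ratio for _, ratio in epochs]
    percent_fixed = 100.0 * sum(fixed) / len(fixed)
    return percent_fixed, median(ratios)

# Made-up values, only to show the calculation
sample = [(False, 1.4), (False, 2.1), (True, 3.5), (True, 6.0), (True, 8.2)]
pct, med = solution_metrics(sample)
print(pct, med)
```

Both numbers depend only on how integer-like the estimates look, so anything that pushes the estimates toward integers will raise them whether or not the position is any better.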

Is it possible that fix-and-hold is too much of a good thing? Once it is turned on, the phase-bias adjustments towards the integer solutions are done every epoch, tightly constraining the phase-biases and making it very difficult to “let go” of an incorrect solution. What if the fix-and-hold adjustments were done only for a single epoch when we first meet the enabling criteria, then turned off and not used again until we lose our fix and meet the criteria again? Instead of “holding” on to the integer solution after a fix, we “bump” the solution in the right direction, then let go. I will call this “Fix-and-Bump”. Note that the “cheating” effect of adjusting the biases will be retained in the phase-bias states, so turning off fix-and-hold is not enough to immediately re-validate the integer ambiguity resolution. I suspect, however, that erroneous fixes will not be stable and, without the continuous adjustments from fix-and-hold, they will drift away from the integer solutions fairly quickly.
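The gating logic for fix-and-bump can be sketched in a few lines. This is only an illustration of the idea described above, with a hypothetical function name and a made-up sequence of epochs; the enabling criteria themselves are reduced to a single boolean per epoch.

```python
def bump_epochs(fix_ok):
    """Return, per epoch, whether the hold-style adjustment would be applied.

    fix_ok: list of booleans, True when the enabling criteria are met that epoch.
    """
    apply = []
    armed = True                 # ready to bump on the next qualifying epoch
    for ok in fix_ok:
        if ok and armed:
            apply.append(True)   # bump once, then let go
            armed = False
        else:
            apply.append(False)
            if not ok:
                armed = True     # fix lost: re-arm for the next qualifying epoch
    return apply

# Made-up sequence: criteria met at epochs 2-4, lost at epoch 5, met again at 6-7
criteria = [False, False, True, True, True, False, True, True]
print(bump_epochs(criteria))
# [False, False, True, False, False, False, True, False]
```

With fix-and-hold, the adjustment would instead be applied on every epoch where the criteria are met, so the only difference between the two is the `armed` flag.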

A good analogy might be an electric motor in a circuit with a 5 amp fuse and an 8 amp starting current. Short-circuiting the fuse (fix-and-hold) will make the motor run but a malfunction (erroneous fix) after the motor has started could cause a fire. Short-circuiting the fuse just long enough to start the motor (fix-and-bump) would also make the motor run but would be much safer in the case of a malfunction (erroneous fix) after the motor has started.

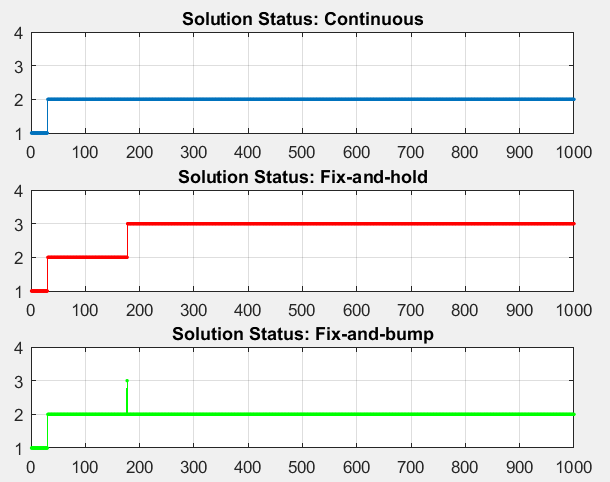

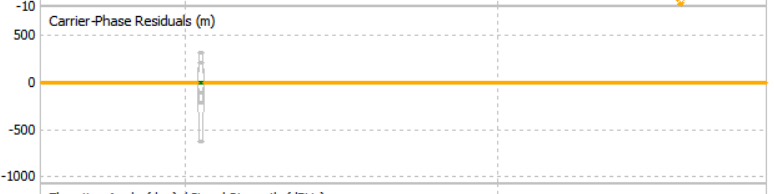

Below I have plotted the fractional part of one of the phase-bias estimate differences for the three cases. The discontinuity at 180 seconds is where fix-and-hold and fix-and-bump were enabled. The plot on the right is just a zoomed-in version of the plot on the left.

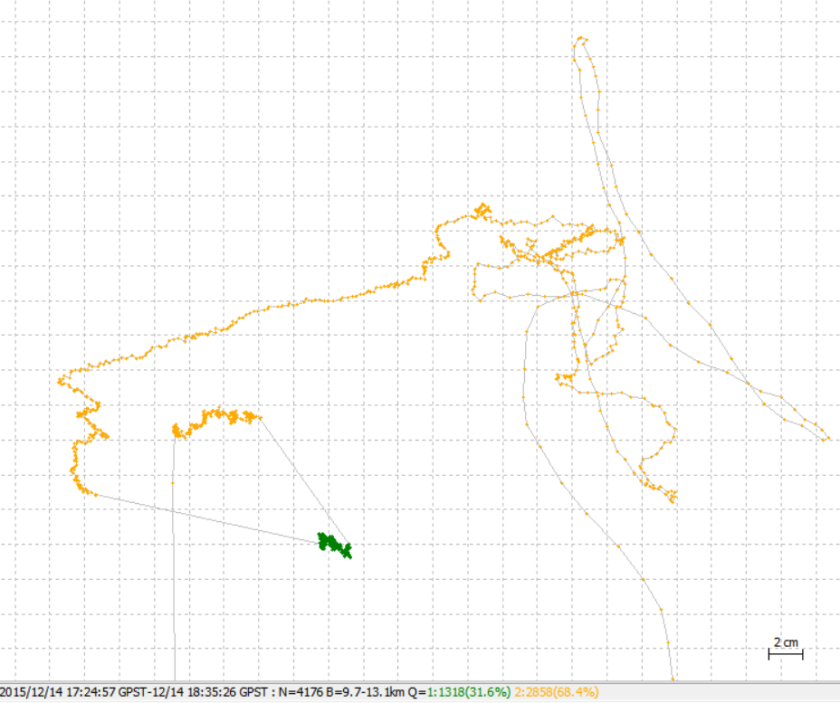

Here are the solution stats for each run (1=float, 2=fix, 3=hold).

As you can see, even a single adjustment (fix-and-bump) gives us an estimate of the phase-bias that is very close to what we get if we make adjustments every epoch (fix-and-hold). This supports the idea that it may be possible to get most of the benefit of fix-and-hold while avoiding some of the downside.

At this point, this is more of a thought exercise than a proposal to change RTKLIB, but I think it may be worth considering. I hope to evaluate this change further when I have more data sets to look at.

I will use this feature in my next post, though, which is one reason I’ve devoted a fair bit of time to it here. In that post I will extend fix-and-bump to the GLONASS satellites. Instead of using the feedback short-circuit path (as I’ve called it) to improve the phase-bias estimates, I will use it to estimate the inter-channel biases, then enable GLONASS integer ambiguity resolution. Not having the inter-channel bias values is what currently prevents integer ambiguity resolution (gloarmode) for the GLONASS satellites from working. Fix-and-bump should provide better evaluation of the quality of the combined GPS/GLONASS solutions. That’s enough for now … I’ll leave the rest to the next post.

Note: Readers familiar with the solution algorithms will notice that I have left out certain details and that some of my statements are not quite precisely correct. For the most part this was done intentionally to keep things focused but I believe the gist of the argument is valid even when all the details are included. Please let me know if you think I have left anything important out.